PVT encoder

use of Transformers in vision

For example, some works model the vision task as a dictionary lookup problem with learnable queries, and use the Transformer decoder as a task-specific head on top of the CNN backbone.

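The dictionary-lookup view can be illustrated with a minimal NumPy sketch of a single cross-attention step. It assumes one step is enough for illustration; a full decoder head (DETR is the best-known example) also stacks self-attention, feed-forward layers and several decoder layers. The query count, widths and random weights are hypothetical; in a trained model the queries and projections are learned.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def query_lookup(queries, features, w_k, w_v):
    """One cross-attention step: learnable queries 'look up' a CNN feature map."""
    tokens = features.reshape(-1, features.shape[-1])   # (h*w, c): flatten the spatial grid
    keys = tokens @ w_k                                  # (h*w, d): the dictionary "keys"
    values = tokens @ w_v                                # (h*w, d): the dictionary "values"
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])  # (num_queries, h*w) similarities
    weights = softmax(scores, axis=-1)                   # soft match of each query to every location
    return weights @ values, weights                     # (num_queries, d) retrieved features


rng = np.random.default_rng(0)
queries = rng.standard_normal((10, 32))     # hypothetical: 10 learnable queries of width 32
features = rng.standard_normal((7, 7, 64))  # hypothetical 7x7x64 CNN backbone output
w_k = rng.standard_normal((64, 32))
w_v = rng.standard_normal((64, 32))
out, weights = query_lookup(queries, features, w_k, w_v)
print(out.shape)  # (10, 32)
```

Each query returns a weighted average of values over all spatial locations, which is what makes this a "soft" dictionary lookup rather than an exact key match.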

ViT

has a columnar (single-stage) structure that takes coarse image patches as input

shortcomings:

- the output feature map is single-scale and low-resolution

- high computational cost
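Both shortcomings can be seen with a quick count of self-attention scores. This sketch assumes a 224×224 input and compares patch size 16 (a common ViT setting) with patch size 4 (the fine-grained size PVT uses); the number of heads and the embedding width are ignored.

```python
def vit_tokens(image_size, patch_size):
    # Number of patch tokens for a square image split into non-overlapping patches.
    side = image_size // patch_size
    return side * side


def attention_matrix_entries(num_tokens):
    # Self-attention compares every token with every other token: N x N scores per head.
    return num_tokens * num_tokens


for patch in (16, 4):
    n = vit_tokens(224, patch)
    print(f"patch={patch:2d}: {n} tokens, {attention_matrix_entries(n):,} attention scores per head")
```

With 16×16 patches the single output grid is only 14×14 (1/16 of the input resolution). Shrinking the patches to 4×4 gives a 56×56 grid, but the attention matrix grows 256 times (38,416 to 9,834,496 scores per head), which is why a single-stage ViT cannot simply use fine patches.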

PVT

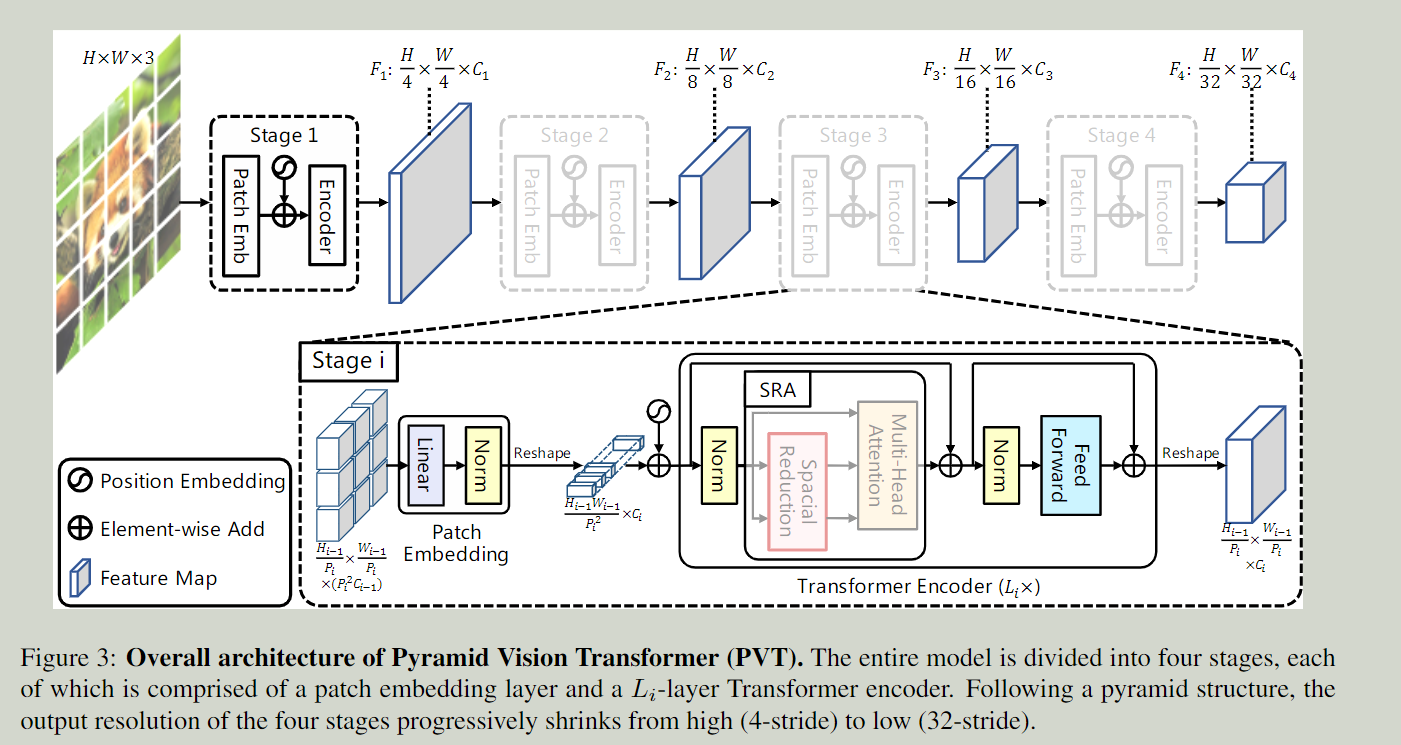

a pure transformer backbone

advantages:

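- takes fine-grained image patches (4×4 pixels per patch) as input to learn high-resolution representations

- uses a progressive shrinking pyramid that reduces the Transformer's sequence length as the network deepens, lowering the computation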

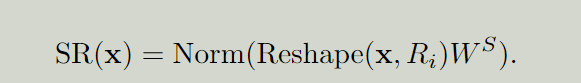

- introduces a spatial-reduction attention (SRA) layer to further reduce the cost of learning high-resolution feature maps

- provides a global receptive field
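A small calculation shows how the shrinking pyramid and SRA together keep attention affordable. It assumes a 224×224 input, stage strides of 4, 8, 16 and 32, and spatial-reduction ratios of 8, 4, 2 and 1; the ratios are my recollection of the usual PVT setting, so treat them as an assumption.

```python
def pyramid_stats(image_size=224, strides=(4, 8, 16, 32), sr_ratios=(8, 4, 2, 1)):
    """Per-stage query tokens and reduced key/value tokens in a PVT-style pyramid."""
    stats = []
    for stride, r in zip(strides, sr_ratios):
        side = image_size // stride   # feature-map height (= width) at this stage
        queries = side * side         # sequence length processed by the encoder
        kv = (side // r) ** 2         # K/V length after spatial reduction by ratio r
        stats.append((stride, queries, kv, queries * kv))
    return stats


for stride, q, kv, scores in pyramid_stats():
    print(f"1/{stride:<2} resolution: {q:5d} queries x {kv:3d} keys = {scores:7,d} scores")
```

Under these assumptions every stage attends to the same 49 reduced keys, so stage 1 needs 3136 × 49 = 153,664 scores per head instead of the 3136² ≈ 9.8 million a plain attention layer would need at that resolution.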

Feature Pyramid for Transformer

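In stage $i$, the input feature map $F_{i-1}$ (of height $H_{i-1}$ and width $W_{i-1}$) is first divided evenly into $\frac{H_{i-1}W_{i-1}}{P_i^2}$ patches. Each patch is flattened and projected to a $C_i$-dimensional embedding. After this linear projection, the embedded patches can be viewed as a feature map of size $\frac{H_{i-1}}{P_i} \times \frac{W_{i-1}}{P_i} \times C_i$, i.e. its height and width are $P_i$ times smaller than the input. The embedded patches, together with a position embedding, then pass through a Transformer encoder with $L_i$ layers, and the output is reshaped into the feature map $F_i$.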
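The patch-embedding step can be sketched in NumPy as split, flatten, then project. It assumes stage 1 with $P_1 = 4$, a 224×224×3 input and a hypothetical $C_1 = 64$; the position embedding and the encoder are omitted. Projecting flattened non-overlapping patches is mathematically equivalent to a convolution whose kernel size and stride both equal $P_i$.

```python
import numpy as np


def patch_embed(feature_map, patch_size, weight, bias):
    """Split an (H, W, C_in) map into P x P patches, flatten each, project to C_out dims."""
    h, w, c_in = feature_map.shape
    p = patch_size
    assert h % p == 0 and w % p == 0, "H and W must be divisible by the patch size"
    # (H, W, C) -> (H/P, P, W/P, P, C) -> (H/P, W/P, P, P, C): group pixels by patch
    patches = feature_map.reshape(h // p, p, w // p, p, c_in).transpose(0, 2, 1, 3, 4)
    # Flatten each patch into one vector: (H/P * W/P, P*P*C_in)
    flat = patches.reshape(-1, p * p * c_in)
    tokens = flat @ weight + bias  # linear projection: (H/P * W/P, C_out)
    # The same tokens viewed as an (H/P, W/P, C_out) feature map
    return tokens, tokens.reshape(h // p, w // p, -1)


rng = np.random.default_rng(0)
image = rng.standard_normal((224, 224, 3))
c1 = 64                                              # hypothetical embedding width C_1
weight = rng.standard_normal((4 * 4 * 3, c1)) * 0.02
bias = np.zeros(c1)
tokens, grid = patch_embed(image, 4, weight, bias)
print(tokens.shape, grid.shape)  # (3136, 64) (56, 56, 64)
```

The token sequence (3136 tokens) is what the encoder sees; the 56×56×64 view is what gets passed on as $F_1$ once the encoder output is reshaped.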

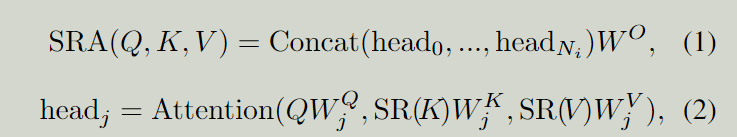

SRA (spatial-reduction attention)

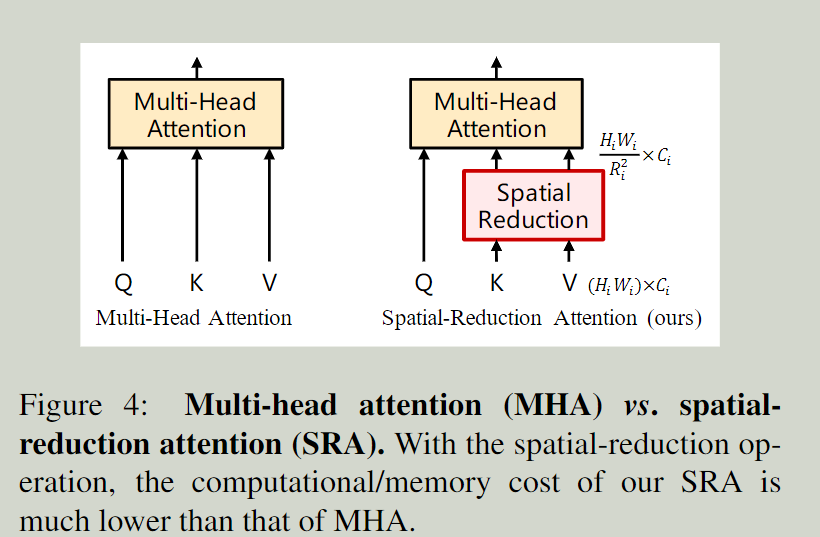

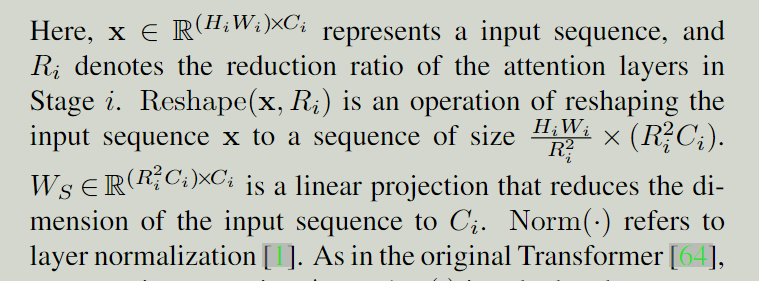
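For reference, the SRA computation written out as I understand it from the PVT paper. Notation: stage $i$ has $N_i$ heads, channel width $C_i$, reduction ratio $R_i$, and $d_{\mathrm{head}} = C_i / N_i$.

$$
\begin{aligned}
\mathrm{SRA}(Q, K, V) &= \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_{N_i})\, W^{O} \\
\mathrm{head}_j &= \mathrm{Attention}\big(Q W_j^{Q},\ \mathrm{SR}(K)\, W_j^{K},\ \mathrm{SR}(V)\, W_j^{V}\big) \\
\mathrm{SR}(x) &= \mathrm{Norm}\big(\mathrm{Reshape}(x, R_i)\, W^{S}\big) \\
\mathrm{Attention}(q, k, v) &= \mathrm{Softmax}\!\left(\frac{q k^{\top}}{\sqrt{d_{\mathrm{head}}}}\right) v
\end{aligned}
$$

Here $W_j^{Q}, W_j^{K}, W_j^{V} \in \mathbb{R}^{C_i \times d_{\mathrm{head}}}$ and $W^{O} \in \mathbb{R}^{C_i \times C_i}$. $\mathrm{Reshape}(x, R_i)$ turns $x \in \mathbb{R}^{(H_iW_i) \times C_i}$ into $\mathbb{R}^{\frac{H_iW_i}{R_i^2} \times (R_i^2 C_i)}$, $W^{S} \in \mathbb{R}^{(R_i^2 C_i) \times C_i}$ projects it back to $C_i$ channels, and $\mathrm{Norm}$ is layer normalization. Because the key/value sequence is $R_i^2$ times shorter, the attention matrix has $H_iW_i \times \frac{H_iW_i}{R_i^2}$ entries instead of $(H_iW_i)^2$, so its cost is $R_i^2$ times lower than that of plain MHA.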

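Like MHA (multi-head attention), SRA receives a query Q, a key K and a value V as input. The difference is that SRA reduces the spatial scale of K and V before the attention operation, which greatly reduces computation and memory cost.

Everything else is the same as attention in the original Transformer; only K and V first pass through a spatial-reduction operation.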
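A minimal NumPy sketch of SRA, assuming the formulation above: Q is computed from the full token sequence, while K and V come from a spatially reduced copy in which each $R_i \times R_i$ window of tokens is concatenated, linearly projected back to $C_i$ channels (the hypothetical weight `w_s`) and layer-normalized. The layer norm omits its learnable scale and shift, all weights are random placeholders, and I skip the reduction when `r == 1`, where SRA reduces to ordinary MHA.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def layer_norm(x, eps=1e-5):
    # LayerNorm over the channel axis (learnable scale/shift omitted for brevity).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def spatial_reduce(x, h, w, r, w_s):
    """SR(x): merge each r x r window of tokens into one token, project to C, normalize."""
    c = x.shape[-1]
    grid = x.reshape(h, w, c)
    # Group tokens by window: (HW / r^2, r^2 * C), i.e. Reshape(x, R_i)
    windows = grid.reshape(h // r, r, w // r, r, c).transpose(0, 2, 1, 3, 4)
    windows = windows.reshape(-1, r * r * c)
    return layer_norm(windows @ w_s)  # (HW / r^2, C)


def sra(x, h, w, r, num_heads, w_q, w_k, w_v, w_o, w_s):
    """Spatial-reduction attention on a token sequence x of shape (h*w, C)."""
    n, c = x.shape
    d = c // num_heads
    kv_in = spatial_reduce(x, h, w, r, w_s) if r > 1 else x
    q = (x @ w_q).reshape(n, num_heads, d).transpose(1, 0, 2)       # (heads, n, d)
    k = (kv_in @ w_k).reshape(-1, num_heads, d).transpose(1, 0, 2)  # (heads, n/r^2, d)
    v = (kv_in @ w_v).reshape(-1, num_heads, d).transpose(1, 0, 2)  # (heads, n/r^2, d)
    attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d))           # (heads, n, n/r^2)
    out = (attn @ v).transpose(1, 0, 2).reshape(n, c)               # concatenate heads
    return out @ w_o, attn


rng = np.random.default_rng(0)
h = w = 56                    # hypothetical stage-1 grid for a 224x224 input
c, r, heads = 64, 8, 1        # hypothetical C_1, R_1, N_1


def init(shape):
    return rng.standard_normal(shape) * 0.02


x = rng.standard_normal((h * w, c))
out, attn = sra(x, h, w, r, heads,
                init((c, c)), init((c, c)), init((c, c)), init((c, c)), init((r * r * c, c)))
print(out.shape, attn.shape)  # (3136, 64) (1, 3136, 49)
```

The output keeps one token per query position (3136), so the feature-map resolution is preserved; only the attention matrix shrinks, from 3136×3136 to 3136×49.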

hyperparameters:

PVT follows the design rules of ResNet:

- use a small number of output channels in shallow stages

- concentrate most of the computation in the intermediate stages

As a result, in PVT:

- as the network gets deeper, the hidden dimension gradually increases and the output resolution progressively shrinks

- most of the computation is concentrated in Stage 3
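These rules can be checked against a concrete configuration. The numbers below are my recollection of the PVT-Small setting (verify against the paper's configuration table before relying on them), and the per-stage layer count is only a rough proxy for where the computation goes.

```python
# Assumed PVT-Small-like configuration (recalled, not authoritative).
PVT_SMALL = {
    "strides":   [4, 8, 16, 32],     # output resolution is 1/stride of the input
    "channels":  [64, 128, 320, 512],
    "depths":    [3, 4, 6, 3],       # encoder layers per stage
    "num_heads": [1, 2, 5, 8],
    "sr_ratios": [8, 4, 2, 1],
}


def check_design_rules(cfg):
    ch, st, dp = cfg["channels"], cfg["strides"], cfg["depths"]
    widening = all(a < b for a, b in zip(ch, ch[1:]))   # hidden dim grows with depth
    shrinking = all(a < b for a, b in zip(st, st[1:]))  # stride grows, so resolution shrinks
    deepest = dp.index(max(dp)) + 1                     # 1-based stage with the most layers
    return widening, shrinking, deepest


# Channels divided by heads gives the per-head width at each stage.
head_dims = [c // n for c, n in zip(PVT_SMALL["channels"], PVT_SMALL["num_heads"])]
print(check_design_rules(PVT_SMALL), head_dims)  # (True, True, 3) [64, 64, 64, 64]
```

In this setting the per-head width stays constant while the channel count grows, and Stage 3 has the most encoder layers.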

advantages over ViT:

- more flexible: can generate feature maps of different scales and channel counts in different stages

- more versatile: can be plugged into most downstream task models with little effort

- more computation- and memory-friendly: can handle higher-resolution feature maps or longer sequences
